Azure vWAN experience

Working with Azure Virtual WAN has made me a better person. I have found a new level of patience and acceptance of things I can't control. The vWAN is one of Microsoft's primary network solutions for larger environments. It's meant to be the one place, or service, where you connect everything network related: VPN gateways, ExpressRoute, vNETs, client VPN and probably more by the time you read this.

This is my experience with the vWAN, and some of the issues we face may be unique to our environment due to particular configurations. It's just a minor collection of the joys of working with Azure that I started jotting down a week ago.

How easily can I trip the vWAN?

3 components: a vNET, a peering and the vWAN. I can cause the vWAN to go into configuration failure by removing one component incorrectly. This has been an issue for as long as I've worked with the vWAN.

One of the architecture ideas with automation in Azure is to hand over deployment of resources to other teams. A team deploying services or servers that require a new vNET would like to be able to do so without going through some network person. They only need permissions in their own resource groups to be able to deploy the vNET (and delete it again). Now the vWAN introduces 2 issues.

- I can cause the entire vWAN hub for my region to go into configuration failure by removing the vNET before removing the peering. Disclaimer: I'm a professional at causing Azure network failures, don't try this at home.

1. Create a new vNET.

2. Peer the vWAN to the vNET. It has to be peered from the vWAN side.

3. Delete the vNET without deleting the peering.

Aaand it failed.

This does nothing operationally to the vWAN. It still forwards traffic, but any further changes are locked until the failed state has been resolved. It's easy to fix: I just have to remove the peering from the vWAN and it returns to normal. We can avoid this problem by having the team that deployed the vNET make sure their automation deletes the peering first when decommissioning.

Just a tiny caveat with the vWAN, and this is the second issue. To make changes to the vWAN, a user is required to have permissions to all subscriptions that are in any way peered or configured with the vWAN. Whether this is an issue depends on how permissions and policies are applied, but I don't think anyone would consider this to be smart :)

That's just a small stability quality test of the vWAN. It gets better (worse).
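The decommissioning order is the whole trick, so here is a minimal sketch of it in Python. The two callables are hypothetical stand-ins for whatever your automation uses to delete the hub-side peering and the vNET (they are not real Azure SDK calls); the only thing the sketch shows is that the peering goes first and the vNET is never touched if that step fails.

```python
from typing import Callable, List


def decommission_vnet(
    vnet_name: str,
    delete_hub_peering: Callable[[str], None],  # hypothetical: removes the vWAN-side peering
    delete_vnet: Callable[[str], None],         # hypothetical: removes the vNET itself
) -> List[str]:
    """Tear down a spoke vNET in the order the vWAN hub tolerates."""
    steps: List[str] = []

    # Step 1: remove the peering from the vWAN side first.
    # If this raises, we stop here and the vNET is left in place.
    delete_hub_peering(vnet_name)
    steps.append(f"peering removed for {vnet_name}")

    # Step 2: only now is it safe to delete the vNET.
    delete_vnet(vnet_name)
    steps.append(f"vnet {vnet_name} deleted")
    return steps
```

In practice the two callables would wrap the team's own tooling; the point is simply that the ordering lives in one place instead of in everyone's memory.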

Microsoft, routing and documentation

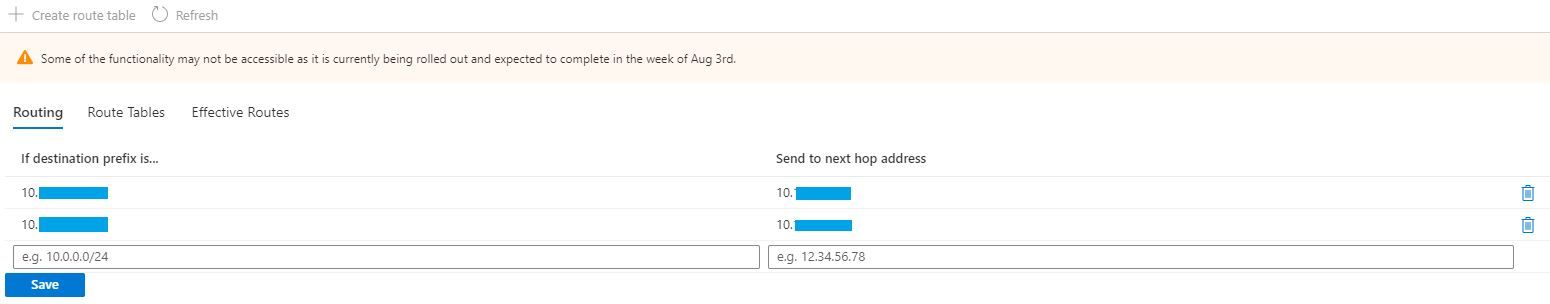

One of the primary goals for the vWAN is to help with vNET routing and provide dynamic routing through BGP and gateways. We arrived at a necessity to build a DMZ using an NVA (VM firewall), which requires modifying route tables to ensure traffic traverses the firewall. Fortunately, the vWAN has a module for specifying static routes. At least that's what it looks like?

Small note on the warning in the picture about "some of the functionality may not be accessible" until the week of August 3rd. The current date is July 10th. More on this later.

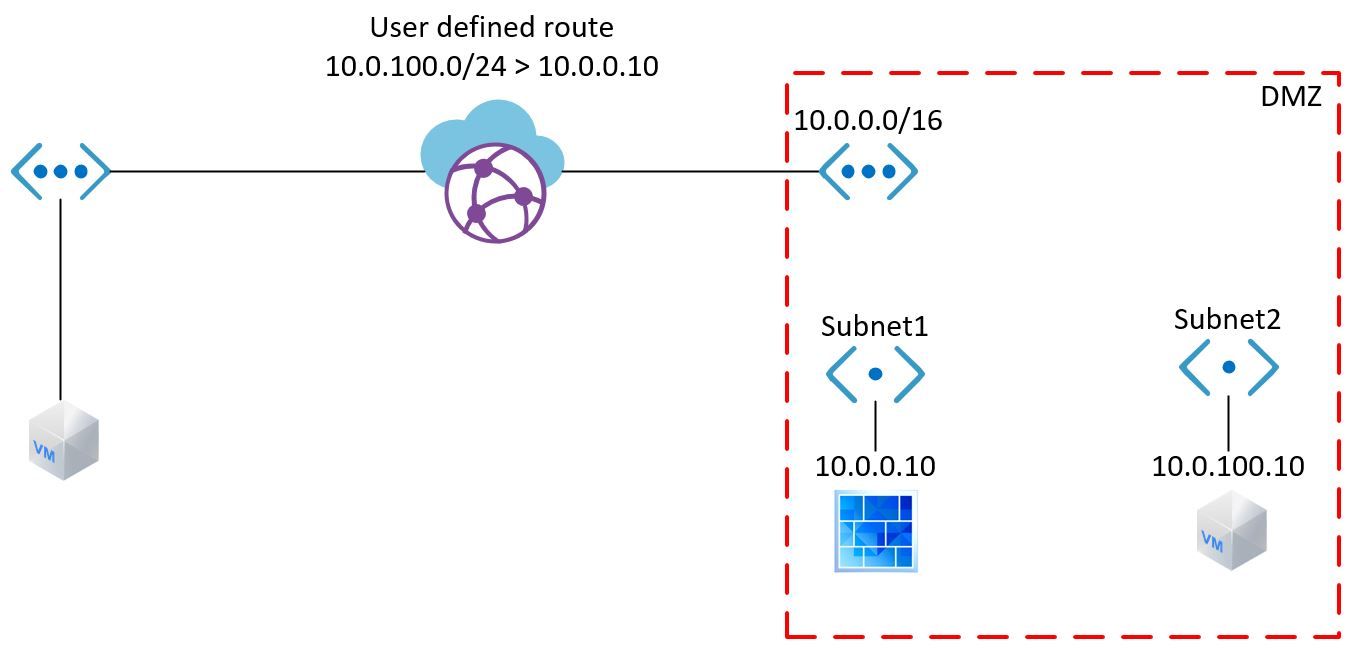

With an understanding of what is needed for a DMZ in Azure, I deploy the following environment:

I spent the better part of a month and wrote a small novel's worth of emails back and forth with Microsoft tech support. Something just didn't work in the environment. We experienced asymmetric routing, which didn't make sense, given there was only one path in and out. Turns out we had actually experienced the same issue previously, but it was chalked up to the vWAN being in "kind of beta".

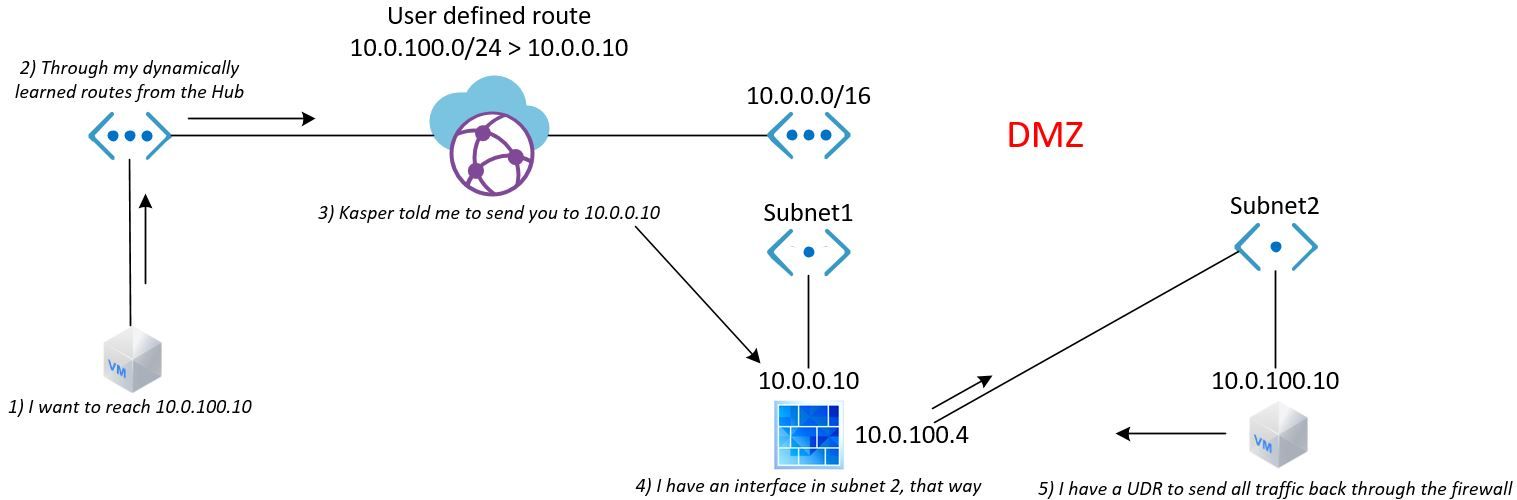

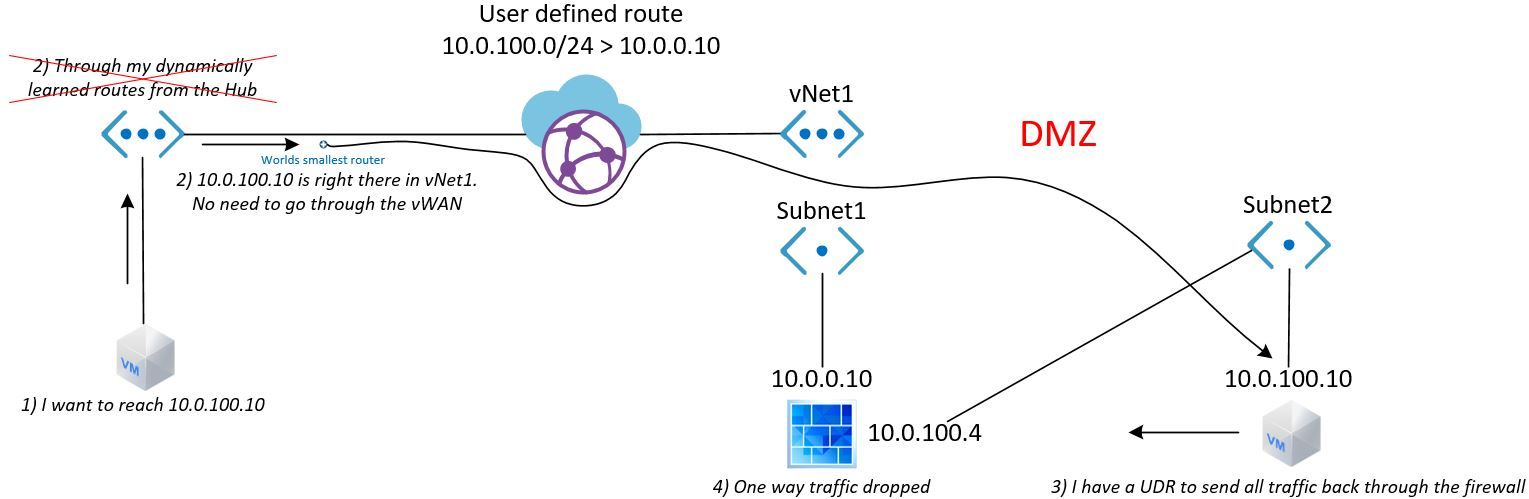

The following image illustrates what I expected to happen with the DMZ environment:

However, there are (probably) 2 issues encountered.

- The UDR / static route in the vWAN does not work for vNET-to-vNET routing. This I had confirmed by Microsoft engineers and one day, some year, the documentation will be updated. The UDR table is only evaluated for traffic passing through a gateway (ExpressRoute Gateway, VPN Gateway).

- The UDR routes are not propagated from the vWAN to BGP peers or vNET routing tables. I don't see the UDR in the BGP table on our on-prem devices. If I query the effective routing table of the vWAN, the UDR routes are not present.
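The second check, whether a static route actually shows up where you expect it, is easy to script once you have the effective routes exported. A minimal sketch, assuming the routes are available as a list of dicts with a `prefix` key (that shape is my assumption for illustration, not the format of any particular Azure export):

```python
import ipaddress
from typing import Dict, Iterable, List


def missing_static_routes(
    static_prefixes: Iterable[str],
    effective_routes: Iterable[Dict[str, str]],  # assumed shape: {"prefix": "10.0.0.0/16", ...}
) -> List[str]:
    """Return the static prefixes that have no exact match in the effective routes."""
    effective = {ipaddress.ip_network(r["prefix"]) for r in effective_routes}
    # Exact-match comparison only: a covering supernet does not count,
    # because it would not carry the static route's next hop.
    return [p for p in static_prefixes if ipaddress.ip_network(p) not in effective]
```

An empty result means every static route made it into the table; anything returned is a route that was configured but never propagated.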

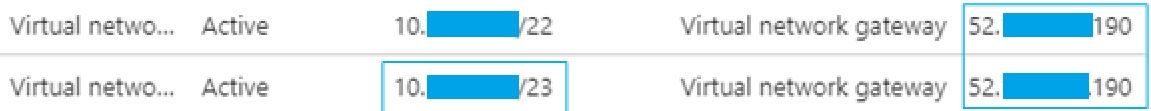

The expectation of the vWAN behaving like a router is my mistake. The following image is a snippet of the effective route table of a VM:

The bottom entry is for the prefix that is supposed to be affected by the UDR in the vWAN, and the top entry is just a dynamic route. What's curious is that they both have the same vNET gateway. This gateway is an "unknown" router to me as the customer. I don't have any information about this gateway or what kind of entity it is. My assumption is that it's a router "between" the vNETs and the vWAN. The following illustration is what I imagine is happening:

With the conclusion that UDR in the vWAN doesn't work, how can I implement a DMZ? You could apply a UDR to ALL VMs, making sure the entry for the DMZ is there. Really bad idea. Way too much work, and it would put network firewall segmentation in the hands of individual VM route tables. Our DMZ doesn't work with the vWAN in this setup.

We created IPsec VPNs between our DMZ and the vWAN, basically deploying a new network environment next to the vWAN.

Without the vWAN, this is how Azure architects a DMZ:

https://github.com/microsoft/Common-Design-Principles-for-a-Hub-and-Spoke-VNET-Archiecture

vWAN, ExpressRoute, Gateways and BGP

Warning: You may experience discomfort discovering how Microsoft does BGP.

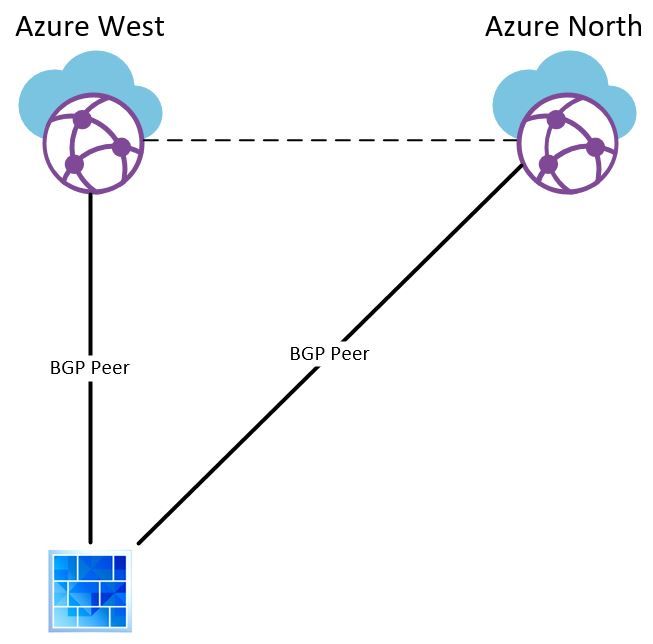

Starting simple. One BGP peer from our on-prem datacenter to each Azure region in Europe:

This was our first gateway implementation to the vWAN. Nothing strange and everything worked as expected. The peering is between 2 private ASNs and there is an Azure backbone connection between the regions.

Maybe the only odd thing was when the West region inexplicably started routing across North to our on-prem, incurring twice the latency, and it took Microsoft more than a week to resolve the issue. But who cares about latency.

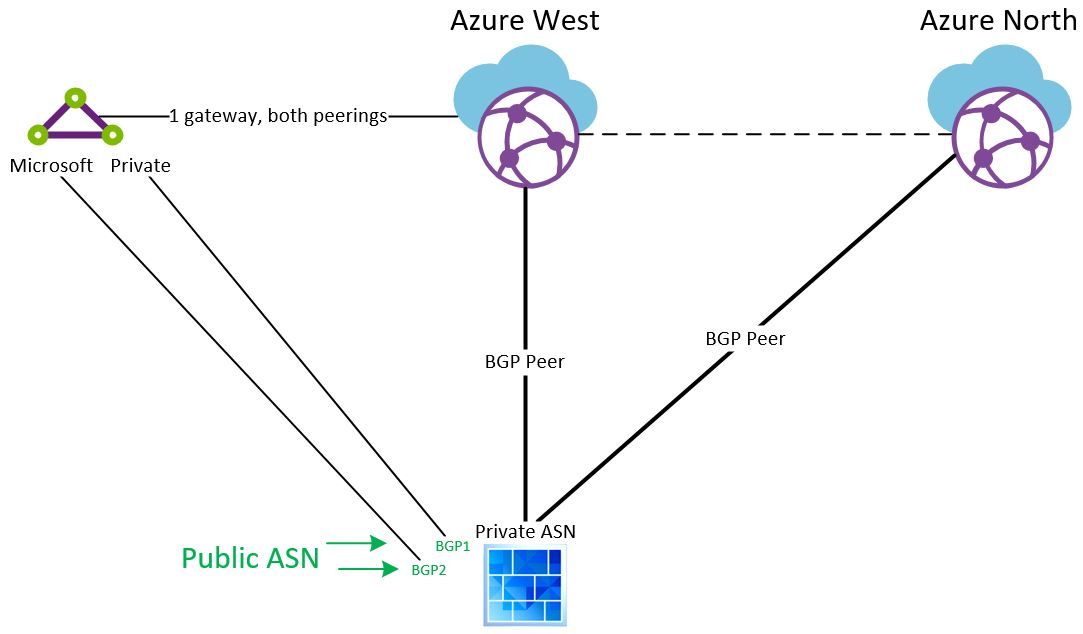

Next up, let's add the ExpressRoute to our setup. Just some more details on the topology:

The Microsoft peering requires a public ASN, and since the private peering is delivered on the same circuit, we used our public ASN for the Private peering as well.

The ER gateway has to be configured to peer with the vWAN. There is actually only "one" connection when this is configured, but would that matter? That will be my lower back pain.

May as well just view the routing table.

*10.x.x.x/24 185.118.x.x 185.118.x.x - - - xxxx,12076

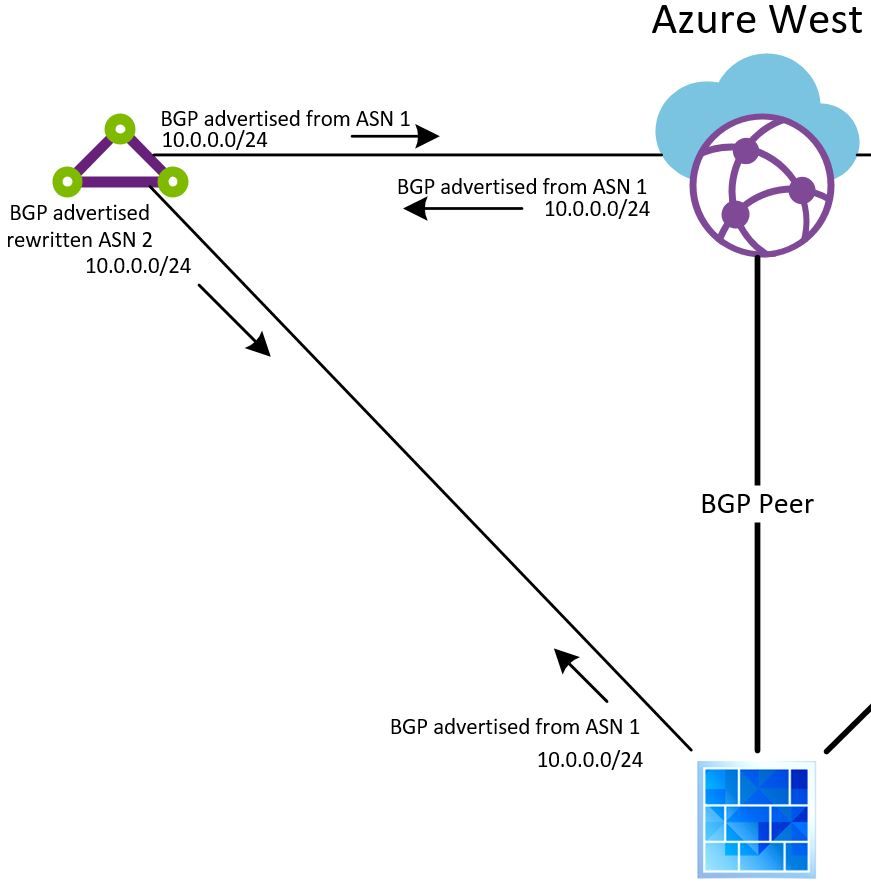

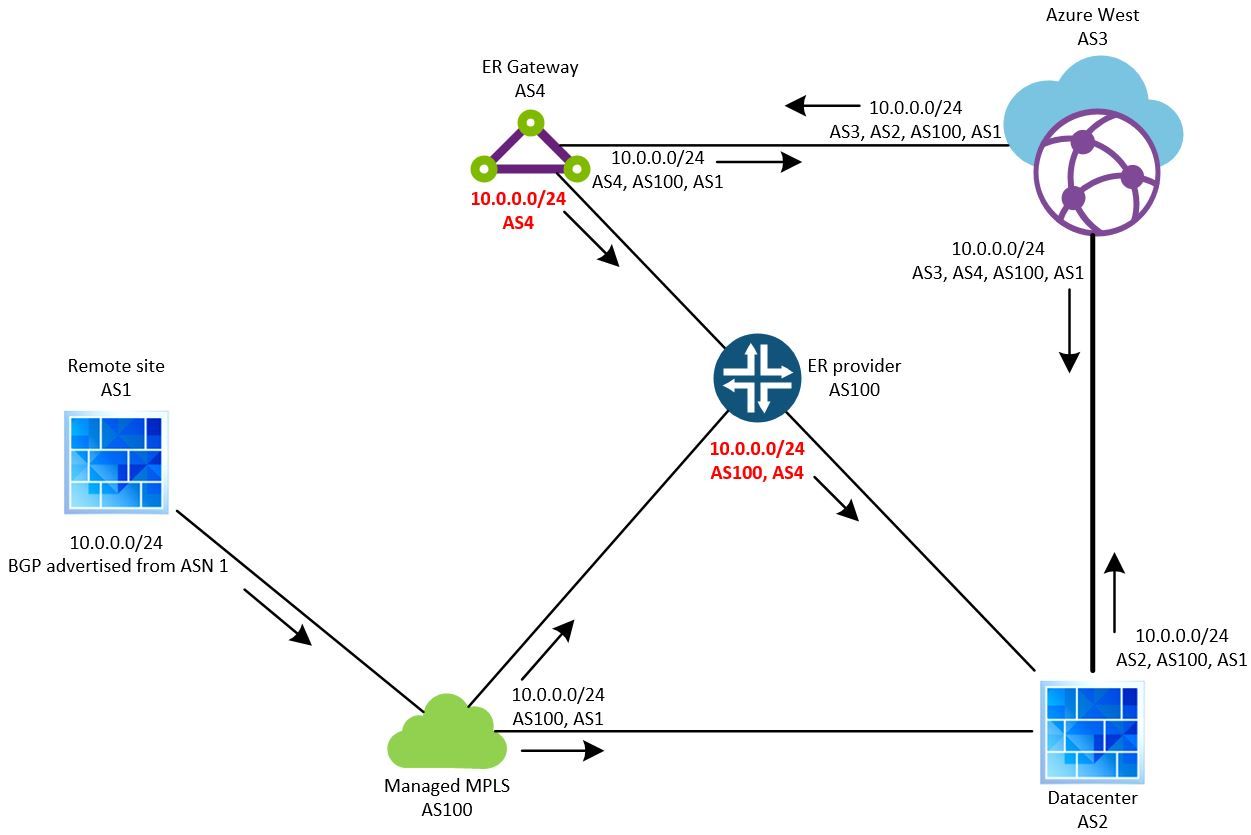

That's a route from the Private peering of a network originating in the vWAN. The problem here is the AS path, which "originates" with Microsoft's public ASN. That is not the ASN of our vWAN, which is a private ASN. It's the same AS path for ALL networks coming from the ER peer. It's rewriting the AS path. How will this kill our network some day?

- Our on-prem routes advertised through IPsec (failover from ER) to the vWAN are advertised back to us with a shorter path and without our originating AS.

- The ER gateway route table and vWAN route table are 2 different entities. The vWAN SHOULD always prefer the ER Gateway. This makes sense, except when the BGP advertisements work like the following:

- The advertisements can end up looking like that because the vWAN doesn't automatically fail over from VPN to ExpressRoute. This is the "should" from above. If the routes are advertised through the VPN Gateway and are in the vWAN route table, then advertisements from the ER Gateway won't take effect until I withdraw them or prepend my AS enough times for the ER to be a shorter path. This is not in line with the documentation:

1. ExpressRoute (ER) over VPN: ER is preferred over VPN when the context is a local hub. Transit connectivity between ExpressRoute circuits is only available through Global Reach. Therefore in scenarios where ExpressRoute circuit is connected to one hub and there is another ExpressRoute circuit connected to a different hub with VPN connection, VPN may be preferred for inter-hub scenarios.

2. AS path length.

What I'd expect from the above scenario is the ER gateway ignoring the advertisement from Azure West, as long as it has the advertisement from on-prem. However, I know this isn't the case, because I've received the routes inbound from the ER to on-prem. Obviously, we apply filters to ignore these routes, but this speaks volumes about Microsoft's quality control of networking features.
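Since the AS path can't be trusted to do loop prevention here, the filter has to match on prefixes instead. A minimal sketch of that logic in Python, purely to illustrate what the filter does (the real thing lives in router policy, and the prefixes below are made up):

```python
import ipaddress
from typing import Iterable, List


def reject_own_prefixes(received: Iterable[str], own_prefixes: Iterable[str]) -> List[str]:
    """Drop any received route that falls inside a prefix we originate ourselves."""
    own = [ipaddress.ip_network(p) for p in own_prefixes]
    accepted: List[str] = []
    for prefix in received:
        net = ipaddress.ip_network(prefix)
        # subnet_of() raises TypeError on mixed IPv4/IPv6, so compare versions first.
        if any(net.version == o.version and net.subnet_of(o) for o in own):
            continue  # our own network coming back to us: ignore it
        accepted.append(prefix)
    return accepted


# Illustrative values only: 10.10.0.0/16 stands in for an on-prem range.
print(reject_own_prefixes(["10.10.1.0/24", "10.20.0.0/16"], ["10.10.0.0/16"]))
```

Matching on "equal to or more specific than what we originate" is a deliberate choice: a route that is merely a supernet of our range is still accepted, since it may legitimately come from elsewhere.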

Back to the point about the ER gateway rewriting AS path and only having one connection to the vWAN.

The Private peering works with private ASNs, but the Microsoft peering requires a public ASN and IP prefix. For the Microsoft peering it makes sense to rewrite the AS path, since you can't have private ASNs in public routing, but it makes no sense for the Private peering. I would wager it's the same routing engine/gateway/whatever you want to call it that is handling both the Private and Microsoft peering. That is why the Private peering is rewriting the AS as well. So much complexity introduced for such a small reason, and it becomes worse if a managed MPLS cloud is involved through the ExpressRoute. Illustration:

I know what you're thinking. No way the ER gateway is advertising the same network 2 ways like this. No way Microsoft is this bad at BGP. Now you're warned. Maybe I've saved you an aneurysm.
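If you want to spot the rewrite without squinting at route tables, the check is simple: the origin AS (the rightmost entry in the path) should be the vWAN's private ASN, not 12076. A minimal sketch, assuming the AS path has already been parsed into a list of integers; the ASN 65515 in the usage line is just a placeholder for whatever private ASN your vWAN uses.

```python
from typing import List

MICROSOFT_ASN = 12076  # the ASN seen as origin in the route shown earlier


def is_private_asn(asn: int) -> bool:
    """Private-use ASN ranges (16-bit and 32-bit) as reserved by RFC 6996."""
    return 64512 <= asn <= 65534 or 4200000000 <= asn <= 4294967294


def origin_rewritten(as_path: List[int], expected_origin: int) -> bool:
    """True when the rightmost (origin) AS is not the one we expect."""
    return not as_path or as_path[-1] != expected_origin


# Placeholder values: 64496 is a documentation ASN, 65515 a stand-in vWAN ASN.
print(origin_rewritten([64496, MICROSOFT_ASN], expected_origin=65515))
```

Run against every route learned over the Private peering, this would flag all of them in our case, which is exactly the problem.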

Due to a slight ISP error, our ER circuit MPLS was connected to our managed MPLS the first time we deployed the ER. We managed to bring down every site connected by MPLS.

Microsoft vWAN upgrade June 26th

Maybe it was the 26th, I don't know and I don't think Microsoft are sure either.

Friday June 26th, some of our developers started experiencing issues accessing Microsoft storage. These are services available through public IP addresses, either through the internet or the Microsoft peering.

Tuesday the 30th I receive the issue and my first thought is that the developers messed up their VMs or local route tables. I know, that's an unfair assumption. Access to services such as Microsoft Storage is routed directly out of a vNET through an "internet backbone" Microsoft provides. This can be changed by enabling "Forced Tunnelling", which is done by having a default route in the vWAN. We didn't and we don't have that.

I decide to query the vWAN route table (just because).

I open a support case with Microsoft and so it begins. Microsoft had rolled out an unannounced update to the vWAN, which overhauled a lot of features. Unfortunately, it seems to have just broken a bunch of stuff in the vWAN. This is a core network product. It's the heart of our Azure environment and Microsoft treats it like an afterthought.

What I found to be the issue with Microsoft Storage access was the vWAN trying to route to the Microsoft peering through our on-prem datacenter. This is actually my mistake, as I had created BGP policies to not advertise the Microsoft communities from the ER, but I had disabled them earlier during a troubleshooting session. STILL, the Microsoft services are not supposed to use vWAN routes, except when forced tunnelling is enabled. I apply the filter, things start working again, but we discover the storage traffic is still flowing across the vWAN and then to the ER. Get this:

- The vWAN preferred our on-prem BGP connection, which is a longer path in every way, over the ER gateway path. We will never know why, because the vWAN route table was broken.

- The storage traffic is still flowing across our vWAN and we are paying for it routing through the ER as well, but we still do not have forced tunnelling enabled.

Taking a look at the effective routes of a VM, the public prefixes from the Microsoft peering are present. I'd make a qualified guess and say that's why the vWAN is being used for ER services. Problem is, there are no filters available from the ER gateway to the vWAN. I can't remove those routes from the vWAN route table. I'm just stuck with this until Microsoft figures out a fix.
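Finding those public prefixes in a VM's effective routes doesn't have to be done by eye. A minimal sketch, assuming you have the route prefixes as plain strings and that everything legitimate in your vNETs is RFC 1918 space (both are assumptions about your environment, and the prefixes in the usage line are documentation examples, not real Microsoft ranges):

```python
import ipaddress
from typing import Iterable, List

# RFC 1918 private IPv4 ranges.
RFC1918 = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def public_prefixes(route_prefixes: Iterable[str]) -> List[str]:
    """Return the IPv4 prefixes that are not inside RFC 1918 space."""
    found: List[str] = []
    for prefix in route_prefixes:
        net = ipaddress.ip_network(prefix)
        if net.version != 4:
            continue  # this sketch only looks at IPv4
        if not any(net.subnet_of(private) for private in RFC1918):
            found.append(prefix)
    return found


print(public_prefixes(["10.1.0.0/16", "203.0.113.0/24", "192.168.5.0/24"]))
```

Anything this returns is a public prefix that has leaked into the VM's route table and is a candidate for why traffic is taking the vWAN path.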

Monday July 6th an update for the vWAN was supposed to be pushed to revert the route table view and fix that issue, according to Microsoft. It did not happen. But they did announce a change, at least. https://azure.microsoft.com/en-us/updates/azure-virtual-wan-multiple-capabilities-and-new-partners-now-generally-available/

Wednesday July 8th an update for the vWAN reverted the routing table and the notification about "some of the functionality may not be accessible until the week of August 3rd" was put up. I guess Microsoft decided to go on summer vacation and leave the rest of the issues with the vWAN for a month.

Tuesday July 14th we still have outstanding problems with the vWAN. At some point during all of this, we started experiencing degraded network performance between 2 vNETs through the vWAN: incredibly long response times for our applications, to the point of timeouts. We were forced to peer the vNETs directly with each other. It should also be noted that the general latency between 2 vNETs through the vWAN is 2-4ms. 2 directly peered vNETs have <1ms, always. Microsoft confirmed this is expected with the vWAN.

What's my point? Take a chill pill when dealing with Azure networking, Microsoft took 20.

Anything positive about the vWAN?

If you don't like money, then it takes care of that for you. Pretty neat. It does provide me with an inordinate amount of material for network memes, so that's quite enjoyable.