Azure vWAN static routing and DMZ

The Virtual WAN has been updated with custom routes and it works, but it has too many limitations. It's been a rough time with the vWAN, and the update hasn't been what we expected. Working with the vWAN requires a mix of the web portal, Azure CLI and PowerShell, and it can be quite frustrating. It feels like Microsoft expected people to implement it as a backbone for global routing and never touch it again. It's not suited to handle regional networking. But anyway.

Associated documentation for configuring static routing in the vWAN:

https://docs.microsoft.com/en-us/azure/virtual-wan/how-to-virtual-hub-routing

I am in the fortunate situation of being able to test all network tools and functions in Azure. This is also how we determined with certainty that the vWAN is not suited for a production network. My sweet test hub in Azure:

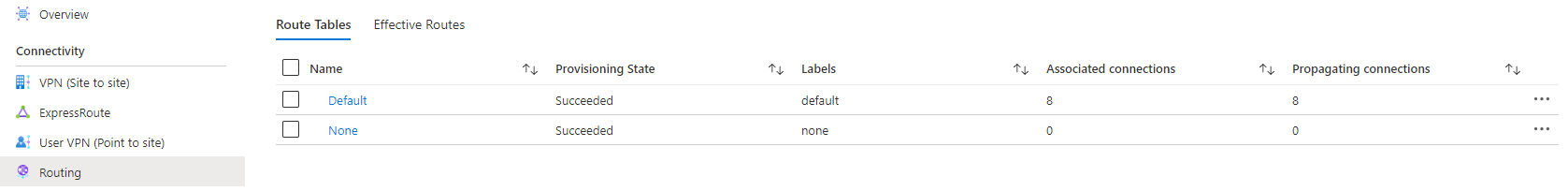

And the hub routing table overview:

Alright. Back in October (it's January as I write this) I tried to add a static route in this test hub. I was met with this error message:

"code": "VirtualNetworkIsAlreadyConnectedToAnotherHub",

"message": "Cannot connect Virtual Network /subscriptions/SUBSCRIPTIONID/resourceGroups/RESOURCEGROUPNAME/providers/Microsoft.Network/virtualNetworks/ANOTHER_HUB_VNETNAME to Virtual Hub /subscriptions/SUBSCRIPTIONID/resourceGroups/RESOURCEGROUPNAME/providers/Microsoft.Network/virtualHubs/VHUB as it is already connected to another Hub Virtual Network /subscriptions/SUBSCRIPTIONID/resourceGroups/RESOURCEGROUPNAME/providers/Microsoft.Network/virtualNetworks/ANOTHER_HUB_VNETNAME."

I have changed some lines in the message to remove our resource names, of course :) It took me a couple of reads to decipher what this message meant, because it just seemed wrong.

"message": "Cannot connect Virtual Network /subscriptions/SUBSCRIPTIONID/resourceGroups/RESOURCEGROUPNAME/providers/Microsoft.Network/virtualNetworks/ANOTHER_HUB_VNETNAME to Virtual Hub /subscriptions/SUBSCRIPTIONID/resourceGroups/VirtualHub/providers/Microsoft.Network/virtualHubs/VHUB

The above part of the error is saying that it can't connect a vNET, which is in another hub, to that other hub. Completely unrelated to what I'm trying to do.

as it is already connected to another Hub Virtual Network /subscriptions/SUBSCRIPTIONID/resourceGroups/RESOURCEGROUPNAME/providers/Microsoft.Network/virtualNetworks/ANOTHER_HUB_VNETNAME."

Because that unrelated vNET is already connected (peered) to a resource, which is the other hub.

I had a session with Microsoft support and the reaction was "yep, that seems pretty broken". Before Christmas I received feedback that a fix would be deployed "next year", because this part of the service is too involved to change during the holidays. December 2021 is, of course, still within "next year". To Microsoft's credit, their support is responsive and as helpful as possible. It just seems like almost anything network related is a black box that is always solved by someone at the backend.

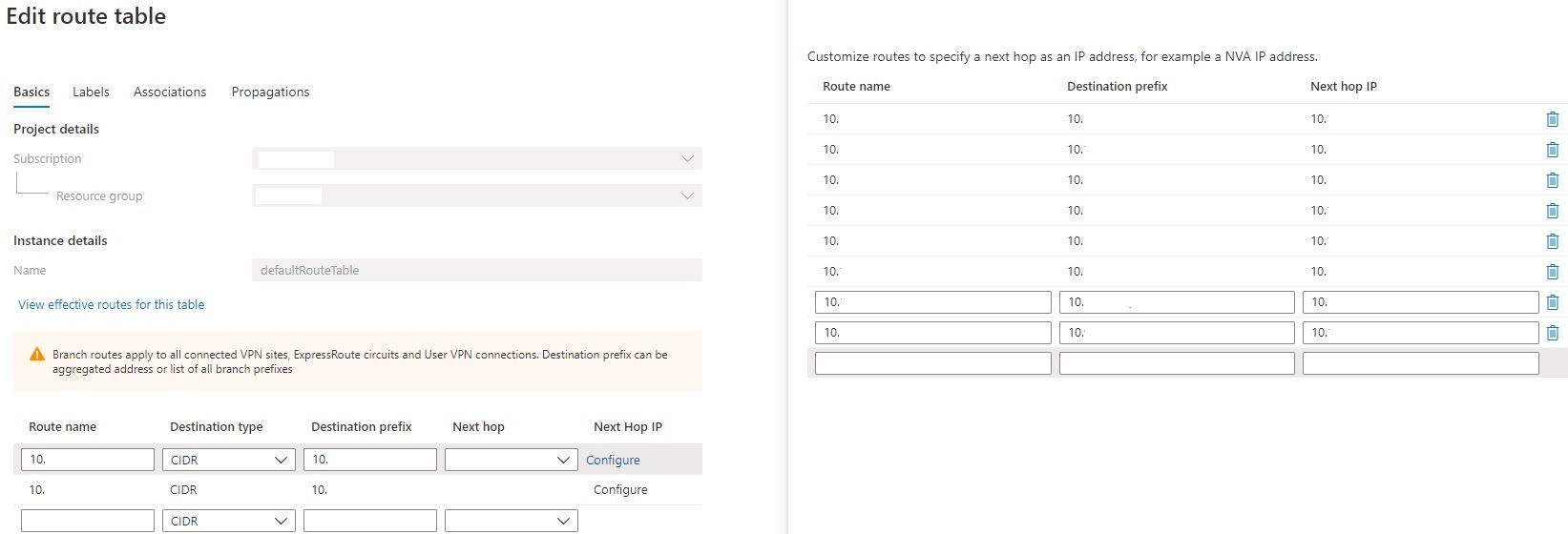

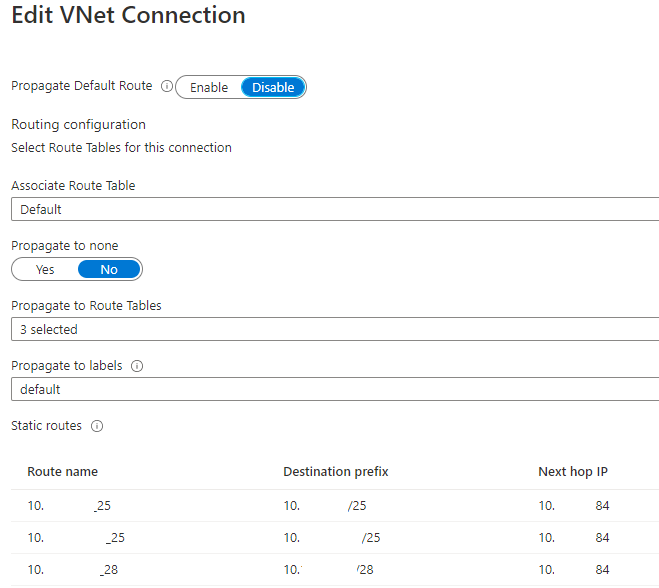

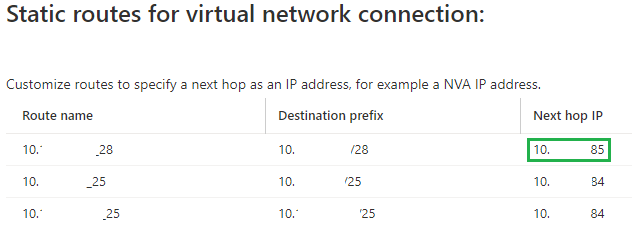

The static routes work in our primary hub and we've successfully used them to fix previously unresolved challenges. Some caveats, though. There are 2 places in the vWAN to configure routes: in the hub routing table, and in every vNET connection by going into "Edit vNET connection":

Using the first option of adding a route to the hub routing table provides:

- A route in the local hub routing table

- Propagation to all vNETs peered with the local hub

- BGP propagation to gateways (VPN, ExpressRoute) configured to the local hub.

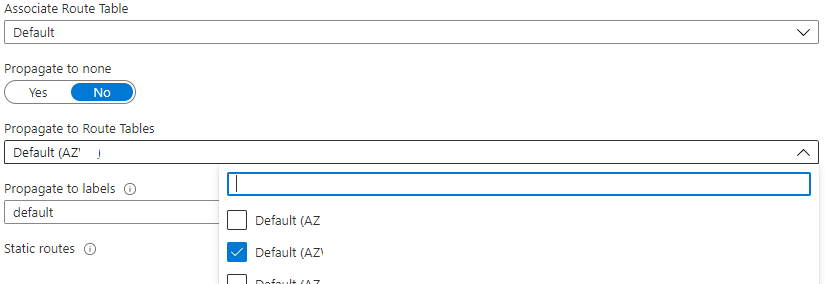

It does not propagate to other hubs in the vWAN, which is a strange limitation. Using the second option with the vNET routing table provides these nifty settings:

"Propagate to Route Tables", which are the tables of the other available hubs. It'd be a fair assumption that adding a route here and checking the boxes for propagation would make the route available in other hubs, but that's not the case. To make a static route available in the other hubs, you have to add it to each local hub routing table. There's actually a well-documented article from Microsoft about this.

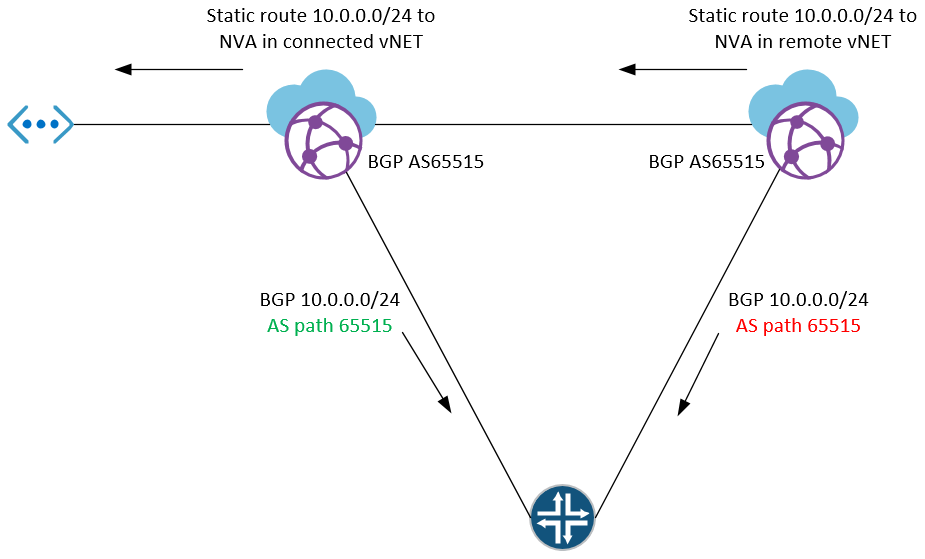
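The propagation behaviour described above can be sketched as a toy model. This is only an illustration of what I observed, not an Azure SDK call: the `Hub` class, the hub, vNET and gateway names, the prefix and the `conn-dmz` next hop label are all made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Hub:
    """Toy model of a vWAN hub, not an Azure SDK object."""
    name: str
    vnets: list = field(default_factory=list)
    gateways: list = field(default_factory=list)
    static_routes: dict = field(default_factory=dict)  # prefix -> next hop label

    def learners(self, prefix):
        """Everything that learns the prefix from this hub: local scope only."""
        if prefix not in self.static_routes:
            return []
        return [self.name] + self.vnets + self.gateways


def add_route_to_all_hubs(hubs, prefix, next_hop):
    """Repeat the static route in every hub, since hubs do not share it."""
    for hub in hubs:
        hub.static_routes[prefix] = next_hop


# Hypothetical hubs and names, for illustration only.
west = Hub("hub-west", vnets=["vnet-a"], gateways=["vpn-west"])
north = Hub("hub-north", vnets=["vnet-b"], gateways=["er-north"])

# Route added in one hub only: the other hub learns nothing.
west.static_routes["10.50.0.0/16"] = "conn-dmz"
only_west = [north.learners("10.50.0.0/16"), west.learners("10.50.0.0/16")]

# Route repeated in every hub: now the second hub serves it as well.
add_route_to_all_hubs([west, north], "10.50.0.0/16", "conn-dmz")
after = north.learners("10.50.0.0/16")

print(only_west)
print(after)
```

In practice the loop in `add_route_to_all_hubs` is the part you'd end up scripting yourself against every hub in the vWAN.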

I'm just left wondering why it has to be like this. It seems so unnecessary and poses a slight BGP challenge. Adding the route to a hub makes it propagate from that hub. Adding it to another hub makes the route propagate from that other hub. Both routes will be propagating as if the advertising hub is the origin. No specific community to match on either, which would have been decent enough. Manually fix your inbound BGP policies to these networks and watch out for increased latency, I guess?
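To make the BGP challenge a bit more concrete, here is a minimal sketch of an inbound policy that prefers one hub over the other for the same prefix. It is a heavily simplified best-path selection (local preference, then AS path length), and the neighbor names, the prefix, the ASN and the preference values are placeholders I picked for the example.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Advertisement:
    prefix: str
    neighbor: str          # which hub gateway sent it (hypothetical names)
    as_path: tuple         # placeholder ASNs
    local_pref: int = 100


# Hypothetical inbound policy: prefer the hub closest to the real next hop.
INBOUND_LOCAL_PREF = {"hub-west-gw": 200, "hub-north-gw": 100}


def apply_inbound_policy(adv):
    """Set local preference per neighbor, since there is no community to match on."""
    pref = INBOUND_LOCAL_PREF.get(adv.neighbor, adv.local_pref)
    return replace(adv, local_pref=pref)


def best_path(advs):
    """Simplified selection: highest local preference, then shortest AS path."""
    return max(advs, key=lambda a: (a.local_pref, -len(a.as_path)))


# Both hubs advertise the same static route as if they were the origin,
# so the two advertisements look identical apart from the neighbor.
received = [
    Advertisement("10.50.0.0/16", "hub-north-gw", (65515,)),
    Advertisement("10.50.0.0/16", "hub-west-gw", (65515,)),
]

best = best_path([apply_inbound_policy(a) for a in received])
print(best.neighbor)
```

Without the policy both paths tie, and which hub your traffic enters through is left to the later tie-breakers, which is where the extra latency can sneak in.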

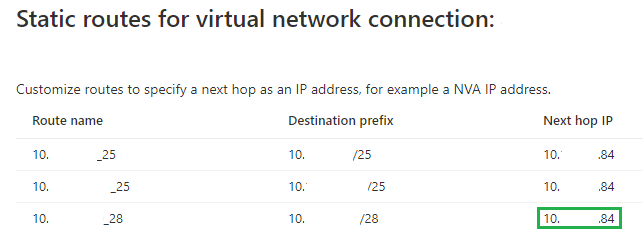

It took me a while to learn what the purpose of the vNET route table is. Right now it's limited, but it's supposed to be a smarter way to configure routes in the future, when more vWAN features are released. Adding a route to the vNET table does nothing with regard to routing. Adding a route to the vNET table and choosing propagation:

The propagation here means "configuration" propagation. When a route is added in the vNET table, it'll automatically be available for configuration in the hub route table:

When I want to add the same route in a hub, the configuration is inherited from the vNET table configuration. This also means that if I change the route in the vNET table, it'll change in all hubs that have inherited it. For example, if I change the next hop for a route in the vNET table, it'll change in the hubs:
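One way to picture this "configuration" propagation is a shared definition that the hubs reference instead of copy. The sketch below is only my mental model of the behaviour, not how Azure implements it; the class names, route name, prefix and next hop addresses are invented for the example.

```python
class VnetRouteTable:
    """Holds route definitions; on its own it does not route anything."""

    def __init__(self):
        self.routes = {}  # route name -> {"prefix": ..., "next_hop": ...}

    def set_route(self, name, prefix, next_hop):
        self.routes[name] = {"prefix": prefix, "next_hop": next_hop}


class HubRouteTable:
    """A hub table that references vNET table definitions instead of copying them."""

    def __init__(self, name, vnet_table):
        self.name = name
        self.vnet_table = vnet_table
        self.inherited = set()

    def inherit(self, route_name):
        self.inherited.add(route_name)

    def effective_routes(self):
        return {n: self.vnet_table.routes[n] for n in sorted(self.inherited)}


# Hypothetical names and addresses, for illustration only.
vnet_table = VnetRouteTable()
vnet_table.set_route("dmz", "10.50.0.0/16", "10.0.0.4")

hub_a = HubRouteTable("hub-a", vnet_table)
hub_b = HubRouteTable("hub-b", vnet_table)
hub_a.inherit("dmz")
hub_b.inherit("dmz")

# Changing the next hop once in the vNET table changes it in every hub.
vnet_table.set_route("dmz", "10.50.0.0/16", "10.0.0.5")
print(hub_a.effective_routes())
print(hub_b.effective_routes())
```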

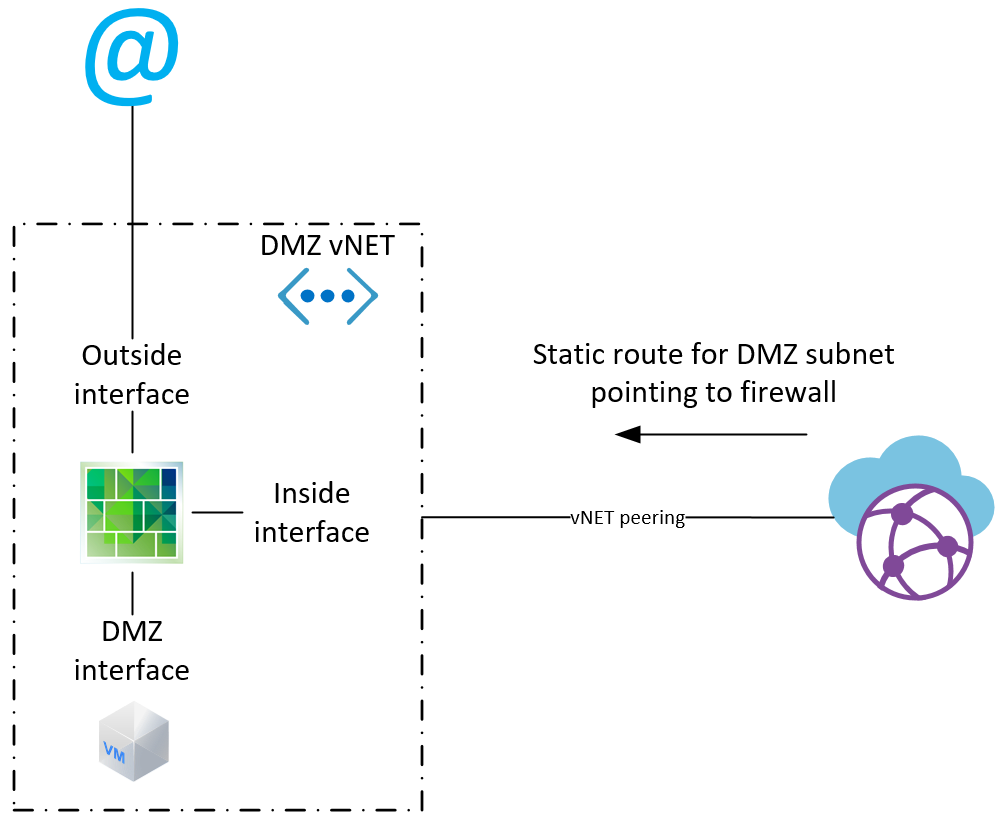

Building a DMZ in Azure

By now Azure provides a lot of options and tools for network services, but we are still circling back to a traditional DMZ with an NVA. Some requirements are simply not met with native Azure services. The design isn't fancy and there are too many constraints introduced by Azure to provide any real flexibility.

Constraints:

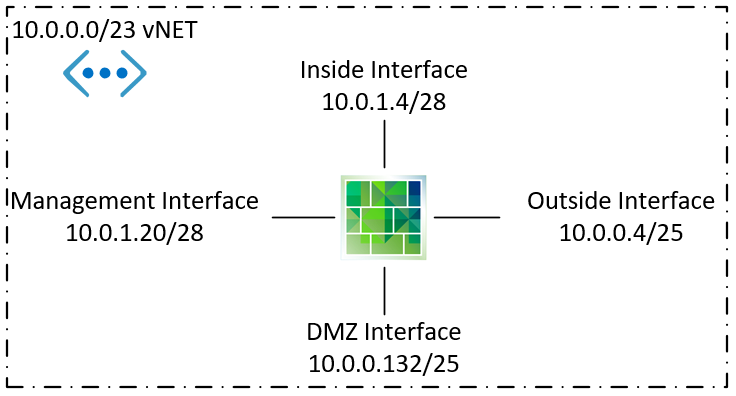

- Most VMs in Azure only support 4 NICs. The default VM size for a Palo Alto VM-100 is a D3, which has more than enough resources, but only 4 interfaces. A VM type supporting 8 NICs has twice the monthly cost. The design has 3 NICs visible and the 4th NIC is used for the management port. The result is a fixed-size DMZ in terms of the number of IP addresses. If the scope is saturated, I'd deploy another vNET and a new firewall.

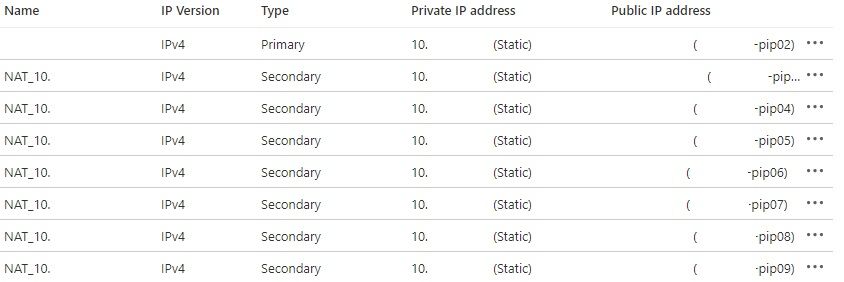

- Public IP addresses for the VMs with firewall static 1-1 NAT. A VM NIC in Azure can be assigned a public IP address. The public address is automatically translated by Azure to the internal IP of the NIC. From the firewall interface configuration perspective:

When we assign a public IP to the Outside NIC, Azure will NAT 10.0.0.4 to the public address. How it looks from Azure VM NIC perspective:

For each additional public IP, I assign another address to the Outside NIC as a secondary address. The secondary address also requires an internal address, which is why the Outside interface has such a big subnet. A static NAT works like:

10.0.0.133 Firewall NAT > 10.0.0.5 Azure NAT > Public IP

10.0.0.5 would be a secondary IP on the Outside NIC.

- Network redundancy. It's the cloud, no need for this, right ;) Create the redundancy by deploying the same environment in another region. There is no HA firewall setup in the same region. It's up to the application/server to be able to fail over. The public IP addresses can't be moved or floated between regions.
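The double NAT from the public IP constraint above (firewall NAT first, then Azure NAT) can be sketched as two lookup tables. The private addresses are the ones from the example above; the public addresses are placeholders from the 203.0.113.0/24 documentation range, and the function names are just for illustration.

```python
import ipaddress

ip = ipaddress.ip_address

# Firewall static NAT: DMZ server address -> secondary IP on the Outside NIC.
FIREWALL_NAT = {ip("10.0.0.133"): ip("10.0.0.5")}

# Azure NAT: private address on the NIC -> associated public IP.
# The public addresses are placeholders from a documentation range.
AZURE_NAT = {
    ip("10.0.0.4"): ip("203.0.113.4"),  # primary address of the Outside NIC
    ip("10.0.0.5"): ip("203.0.113.5"),  # secondary address used for the 1-1 NAT
}


def outbound_source(server_ip):
    """Source address seen on the internet for a DMZ server, or None if unmapped."""
    after_firewall = FIREWALL_NAT.get(ip(server_ip))
    if after_firewall is None:
        return None
    return AZURE_NAT.get(after_firewall)


def inbound_destination(public_ip):
    """Reverse path: public IP -> secondary NIC address -> DMZ server."""
    azure_reverse = {pub: priv for priv, pub in AZURE_NAT.items()}
    firewall_reverse = {outside: inside for inside, outside in FIREWALL_NAT.items()}
    return firewall_reverse.get(azure_reverse.get(ip(public_ip)))


print(outbound_source("10.0.0.133"))
print(inbound_destination("203.0.113.5"))
```

Every published DMZ server costs one secondary address on the Outside NIC, which is why that subnet has to be sized generously up front.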

At this point it seems hard to argue a case for deploying a DMZ using an NVA, but making a VM available to the internet is done using either an Azure front end service or a static public IP. Not every solution is suited to use an Azure front end service, and adding a public IP directly to a VM is not a good idea. NSG and logging features are simply not good enough.

VM config in DMZ

A couple of items have to be configured for the DMZ VMs.

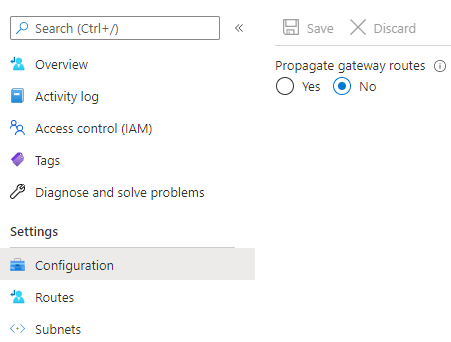

- A route table has to be configured and applied to the DMZ subnet. Make sure to change the propagation of gateway routes to the subnet:

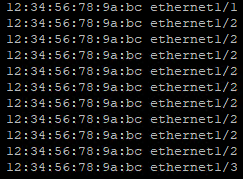

Then create an entry with 0.0.0.0/0 > DMZ FW Interface IP. The VM route table will still contain a local subnet entry, which is the same as we'd expect from a traditional DMZ VLAN and ARP. Except everything is proxy ARP and everything is on MAC 123456789abc.

It would have been pretty neat if the route table feature made it possible to force VM traffic within the same subnet through an NVA. This would allow creating a micro-segmented subnet. The intra-subnet traffic can be forced to route to the NVA and the NVA can process it, but afterwards it gets dropped. Likely as a result of the underlying VXLAN implementation.

An NSG can be configured to block intra-subnet traffic in the vNET, but I find it to be a lacking solution. It'd be the same as deploying network access-lists that block all intra-VLAN traffic in the same way ACI or NSX would. Except without any intelligence, management platform or automation, which we'd have to build ourselves. Using ASGs can be considered, but none of these options are where Microsoft is spending much development time.

- Azure policies to disable changes to NICs and disable adding a public IP to VMs directly. While everyone should be able to deploy the VM resources they need, they should not be able to change any network related settings in this kind of environment. Otherwise the DMZ could easily be circumvented by, for example, removing the route table.
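The effect of the DMZ route table described above comes down to longest prefix match. Here is a minimal sketch of that lookup, assuming a made-up DMZ subnet of 10.0.1.0/24 with the firewall's DMZ interface on 10.0.1.4; neither value is from our environment.

```python
import ipaddress

# Hypothetical addressing: DMZ subnet 10.0.1.0/24, DMZ FW interface 10.0.1.4.
ROUTES = [
    (ipaddress.ip_network("10.0.1.0/24"), "local subnet"),
    (ipaddress.ip_network("0.0.0.0/0"), "10.0.1.4"),  # user-defined default route
]


def next_hop(destination):
    """Pick the matching route with the longest prefix, like any route lookup."""
    dst = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if dst in net]
    _, hop = max(matches, key=lambda match: match[0].prefixlen)
    return hop


print(next_hop("10.0.1.20"))     # neighbour in the same DMZ subnet
print(next_hop("198.51.100.7"))  # anything else
```

The local subnet entry always wins for neighbours in the same subnet, so only traffic leaving the subnet ends up on the firewall. Removing the default route entry would take the firewall out of the path entirely, which is why the policies matter.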

That's it. Happy struggles.